Model Inference on the Edge with Windows ML

Machine learning is helping people work more efficiently, and DirectML provides the performance, conformance, and low-level control developers need to enable these experiences. Frameworks like Windows ML and ONNX Runtime layer on top of DirectML, making it easy to integrate high-performance machine learning into your application. Once the domain of science fiction, scenarios like “enhancing” an image are now possible with contextually aware algorithms that fill in pixels more intelligently than traditional image processing techniques. DxO’s DeepPRIME technology illustrates the use of neural networks to simultaneously denoise and demosaic digital images. DxO uses Windows ML and DirectML to deliver the performance and quality its users expect.

*©Jean-Charles RIVAS - Courtesy of DxO. A denoised and demosaiced image using DxO PhotoLab 4's DeepPRIME algorithm.*

Healthcare is another field applying machine learning in interesting ways. Consider a sonographer using an ultrasound device to evaluate fetal brain development during their patient’s pregnancy. Acquiring the required imaging planes and taking the required measurements is challenging because it demands a lot of manual input from the sonographer. This is where GE Healthcare’s Voluson™ ultrasound devices come in handy: pre-trained neural networks help the sonographer operating the ultrasound probe automatically segment specific imaging planes from a volume and take the necessary measurements. Previously, identifying the appropriate planes and measurements required time-consuming manual adjustments. GE Healthcare relies on Windows ML and DirectML to deliver consistent, reliable results across a broad range of its ultrasound devices.

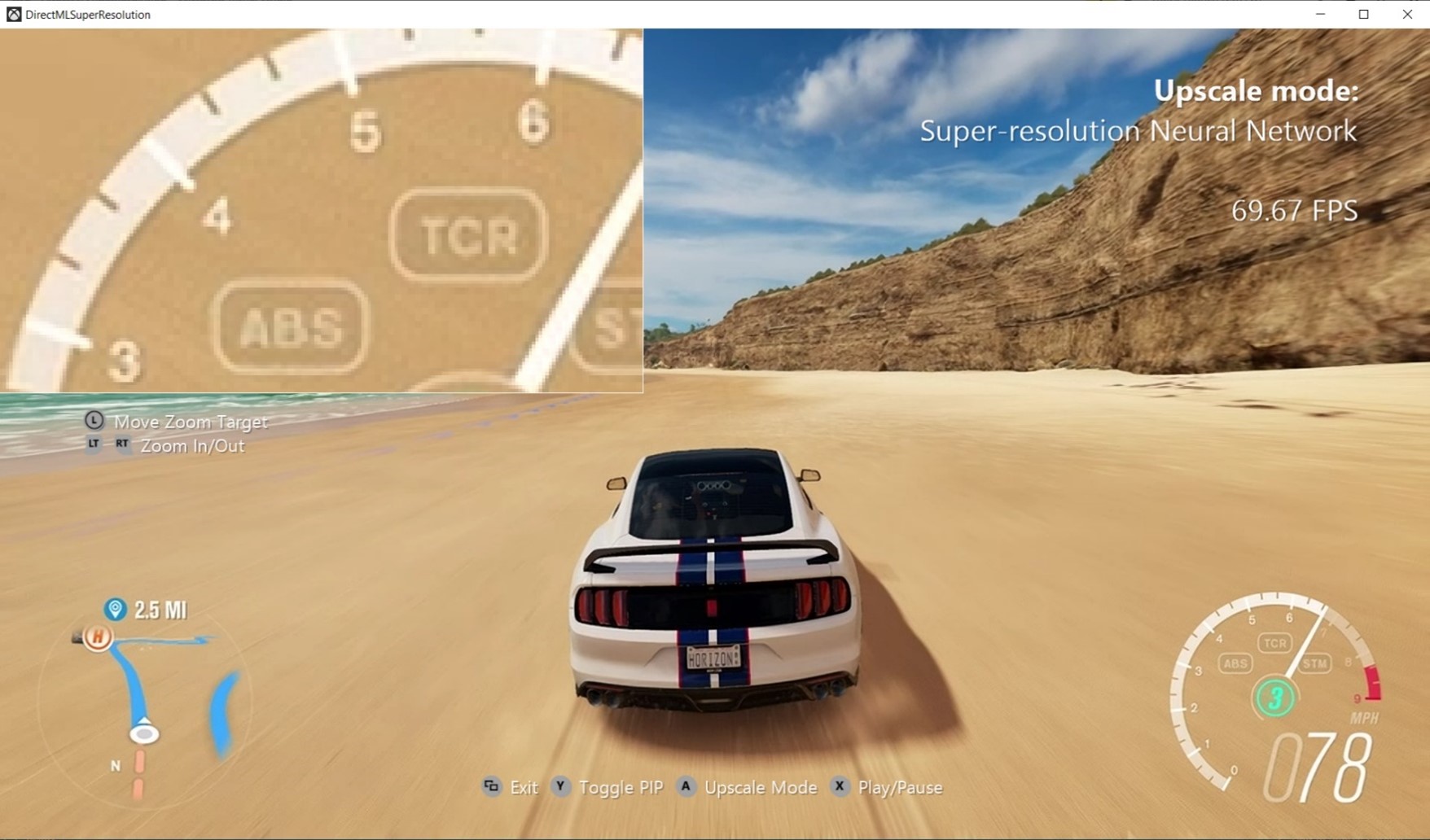

*GE Healthcare’s SonoCNS, which helps capture the measurements required for fetal brain assessment.*

An exciting area of growth is the intersection of machine learning and real-time graphics in video games, where performance is critical. Early applications in this area include using neural networks for superior image upscaling and for filling in the sampling gaps of ray-traced images; these techniques make it possible to present high-resolution gameplay without the cost of rendering at high resolution. The DirectML Super Resolution sample shows how DirectML can integrate seamlessly with these real-time, graphics-intensive applications.
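As a rough illustration of why upscaling saves rendering work, the sketch below compares how many pixels are shaded when a frame is rendered at a lower internal resolution and then upscaled for display. The resolutions are hypothetical examples, not the settings of the DirectML Super Resolution sample, and the sketch assumes that shading cost scales roughly with pixel count.

```python
from typing import Tuple

# Hypothetical resolutions for illustration only; not taken from any specific game or sample.
RENDER_RES: Tuple[int, int] = (1920, 1080)  # internal render resolution
OUTPUT_RES: Tuple[int, int] = (3840, 2160)  # resolution shown on screen after upscaling


def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a width x height frame."""
    return width * height


def rendered_fraction(render_res: Tuple[int, int], output_res: Tuple[int, int]) -> float:
    """Fraction of displayed pixels that are actually rendered before upscaling.

    Assumes shading cost is roughly proportional to pixel count.
    """
    return pixel_count(*render_res) / pixel_count(*output_res)


fraction = rendered_fraction(RENDER_RES, OUTPUT_RES)
# Prints: Rendered 2,073,600 of 8,294,400 pixels (25%)
print(f"Rendered {pixel_count(*RENDER_RES):,} of {pixel_count(*OUTPUT_RES):,} pixels ({fraction:.0%})")
```

With these numbers the renderer shades only a quarter of the displayed pixels. The real saving is smaller because the upscaling network itself consumes GPU time, which is why fast inference matters in this scenario.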

*Example image from the DirectML Super Resolution sample.*

Machine learning is a rapidly evolving field, and new applications like these appear every day: models are used to transcribe audio, convert handwritten notes into text, detect faults in manufacturing, and much more! DirectML has provided the hardware acceleration these scenarios need since Windows 10 version 1903. Now the DirectML NuGet package delivers our latest hardware acceleration investments to framework and application developers even sooner, without waiting for a new Windows release. If your model can be represented in the ONNX format, you too can tap into DirectML.
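For the ONNX Runtime path, one common pattern is to request the DirectML execution provider when it is available and fall back to the CPU otherwise. The minimal Python sketch below assumes a DirectML-enabled ONNX Runtime build (for example the `onnxruntime-directml` package on PyPI), which exposes the provider name `DmlExecutionProvider`. The helper `choose_providers` is hypothetical and only builds the ordered provider list, so it runs without ONNX Runtime installed.

```python
from typing import List, Sequence

# Provider names as reported by onnxruntime.get_available_providers().
# "DmlExecutionProvider" appears only in DirectML-enabled builds;
# "CPUExecutionProvider" is part of every ONNX Runtime build.
DML_PROVIDER = "DmlExecutionProvider"
CPU_PROVIDER = "CPUExecutionProvider"


def choose_providers(available: Sequence[str]) -> List[str]:
    """Return execution providers in priority order: DirectML first, then CPU.

    `available` is expected to come from onnxruntime.get_available_providers().
    """
    preferred = [DML_PROVIDER, CPU_PROVIDER]
    chosen = [p for p in preferred if p in available]
    # Always keep a CPU fallback so a session can be created even without a GPU.
    if CPU_PROVIDER not in chosen:
        chosen.append(CPU_PROVIDER)
    return chosen
```

In an application, the result would be passed to a session, for example `onnxruntime.InferenceSession("model.onnx", providers=choose_providers(onnxruntime.get_available_providers()))`, where `model.onnx` is a placeholder path. Calling `get_providers()` on the session then reports which providers were actually registered.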


Training Models with TensorFlow and Lobe

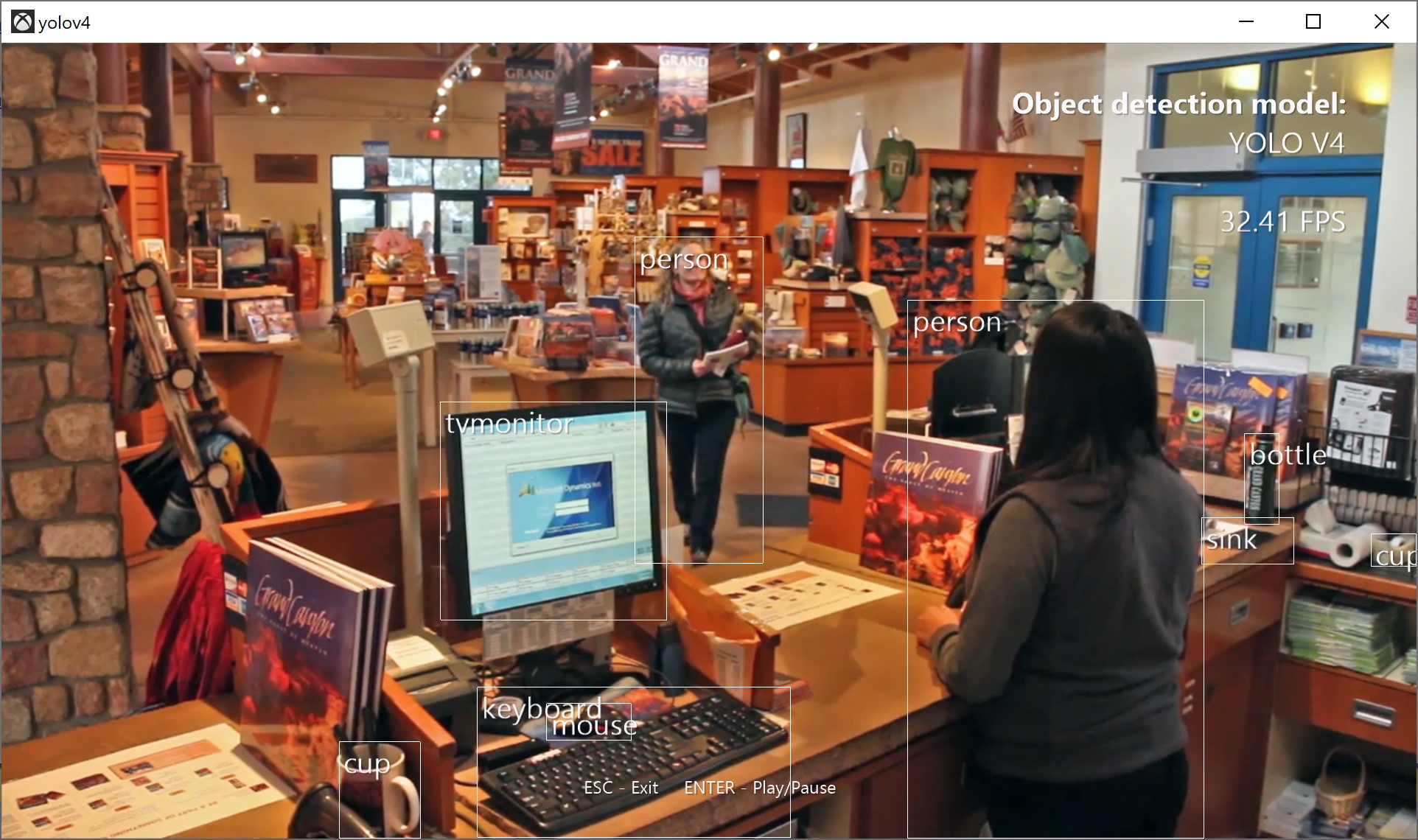

Accelerating inference is where DirectML started; supporting training workloads across the breadth of GPUs in the Windows ecosystem is the next step. In September 2020, we open sourced TensorFlow with DirectML to bring cross-vendor acceleration to the popular TensorFlow framework. This project is all about enabling rapid experimentation and training on your PC, regardless of which GPU your device has, with a simple, painless setup process. We also know that many machine learning developers depend on tools, libraries, and containerized workloads that only work on Unix-like operating systems, so DirectML runs on both Windows and the Windows Subsystem for Linux (WSL). DirectML makes it easy for you to work with the environment and GPU you already have.

*Object detection running on a video using the YOLOv4 model through TensorFlow with DirectML.*

Machine learning is also becoming increasingly accessible with tools like Lobe, an easy-to-use app that has everything you need to bring your machine learning ideas to life. To get started, collect and label your images, and Lobe will automatically train a custom machine learning model for you. On Windows, Lobe uses DirectML to deliver great performance across a wide range of GPUs. When training is done, you can try out your model and ship it to any platform you choose.

https://www.youtube.com/watch?v=Mdcw3Sb98DA


Getting Started with DirectML

If you're a developer looking to benefit from hardware-accelerated machine learning through DirectML, get started today with the framework, package, or application that works best for you:

| | Windows ML | ONNX Runtime with DirectML | TensorFlow with DirectML | Lobe | DirectML |
|---|---|---|---|---|---|
| Use Case | The best developer experience for ONNX model inferencing on Windows. | Cross platform C API for ONNX model inferencing. | Hardware accelerated model training on any DirectX 12 GPU. | An easy to use app that has everything needed to train custom machine learning models. | Provides flexibility with direct access to DirectX 12 resources for high-performance frameworks and applications. |
| Documentation | MS Docs | GitHub | GitHub and MS Docs | Lobe.ai | GitHub and MS Docs |
| Distribution | Windows SDK or NuGet: Microsoft.AI.MachineLearning | NuGet: Microsoft.ML.OnnxRuntime.DirectML | PyPI Package: tensorflow-directml | Application: Lobe | Windows SDK or NuGet: Microsoft.AI.DirectML |
| DirectML Support | Inference | Inference | Inference and Training | Inference and Training | Inference and Training |

The DirectML GitHub repository also offers:

· DirectMLX, a new C++ library that wraps DirectML to make it simpler to use, especially for combining operators into blocks or even into complete models.

· PyDirectML, a Python binding for quickly experimenting with DirectML and running the Python samples without writing a full C++ sample.

· Sample applications in both C++ and Python, including a full end-to-end implementation of real-time object detection using YOLOv4.

This post only scratches the surface of what’s possible with machine learning and DirectML, and we’re excited to see where developers take DirectML next! Stay tuned to the DirectML GitHub for new resources and future updates on the investments we’re making.

Editor’s note (Jan. 28, 2021): The post was updated after publication with changes to the images.

Windows AI Platform Team, Khareem Sudlow